Machine Learning for Safety-Critical Applications: Opportunities, Challenges, and a Research Agenda (2025)

Chapter: 4 A Research Agenda to Bridge Machine Learning and Safety Engineering

4

A Research Agenda to Bridge Machine Learning and Safety Engineering

As discussed in Chapter 3, extending safety engineering to cyber-physical systems that include artificial intelligence (AI) components brings a new set of challenges that suggest changing how this engineering is conceived, approached, and carried out. This chapter presents a research agenda to address the research areas and challenges identified in Chapter 3.

Finding 4-1: Safely integrating machine learning (ML) components into physical systems requires a new engineering discipline that draws from ML and systems engineering, and a new research agenda in which ML researchers adopt a broader systems perspective and safety engineers embrace the distinctive attributes of ML.

4.1 DATA ENGINEERING TOOLS AND TECHNIQUES

ML components are specified by their training data. Whereas the dominant methodology in ML today assumes that the data have already been collected, the safety-engineering approach requires the specification of requirements for data collection and data quality. This is often an iterative process in which an initial set of data are collected and then analyzed to understand the structure of the environment and its variability.

Tools are needed for understanding and visualizing the dimensions of variability of the data. Classical tools, such as principal component analysis, provide a start, but additional tools are needed that can characterize variability at multiple levels of abstraction, from high-level semantics to low-level signals. This bottom-up analysis must be

combined with top-down analysis of the operational domain and the potential sources of variation. Tools are also needed to support scenario generation. These can build on existing design tools in safety engineering.

Finally, tools and methodologies are needed to drive the data collection process from the data specification. This includes tools for experiment planning to collect data and tools to support high-quality human annotation. Data may be collected in the physical world, or it may be generated via simulation. Generative foundation models, such as large vision-language models and diffusion models, can also contribute to generating training data.

As discussed in Chapter 3, the goal of collecting or generating data for novelty detection raises additional problems because it can be difficult to anticipate the ways in which novelty may enter the operational domain. This is a key challenge for designers of systems that operate in open worlds.

Data generation for reinforcement learning and control is often conducted in two phases. First, humans can manually operate a system to collect examples of good state space trajectories. Second, one or more initial controllers can collect additional trajectories to enhance the diversity and coverage of the training data. Safe data collection may require building specialized test ranges or high-fidelity simulations.

Data generation for user modeling is even more challenging and nearly always requires an iterative or spiral development methodology. Initial data collection may rely on “Wizard of Oz” studies (where research subjects interact with a system operated by an unseen human) combined with a collection of “think aloud” data (where research subjects vocalize their thoughts as they interact with a system). These data can then be annotated with (guesses about) the knowledge state of the user. Early versions of the system can drive subsequent rounds of data collection and user modeling. Scenario-based design is particularly important in user modeling to map the various ways in which the user’s model of the environment and system state may diverge from reality. These divergences then drive the design of the user-system interaction with the goal of bringing the two into alignment so that users can make correct decisions.

Research Gap 1: New and improved tools are needed for specifying data requirements for ML components and for collecting data to meet those requirements.

4.2 BENCHMARK DATA SETS FOR SAFETY-CRITICAL MACHINE LEARNING

The creation and publication of data sets has been an important driver of progress in ML. For example, the ImageNet data set spurred the computer vision community to develop

the deep learning methods that have revolutionized the field. Today, few public data sets exist, and only for very few safety-critical systems applications, while many are needed to advance the state of art for different ML types and application domains. Each such data set should partition the task into scenarios and quantify the severity of harms that could result within each scenario. Given a range of probability distributions over the scenarios, the learning task would be to train an ML component to reduce the risk (i.e., the product of harm and probability) below a desired risk tolerance over all such probability distributions. This might require methods that adapt to the distribution of the scenarios in real time.

Benchmark data sets are needed for all five ML component types (supervised, novelty detection, operational domain (OD) detection, reinforcement learning, and user modeling).

Research Gap 2: Multiple benchmark data sets and learning tasks are needed to advance research on ML-enabled safety-critical systems.

4.3 LEARNING ALGORITHMS AND LEARNING THEORY FOR SAFETY-CRITICAL MACHINE LEARNING

Traditional ML has assumed a single, stationary distribution over the input space and the system dynamics. This has produced learning algorithms (and elegant theory) that break down when the distribution changes, as it inevitably does in real applications. Safety-critical learning requires a model that is robust to distribution changes. This is related to the problem of distributionally robust learning, but further work is needed to place it within a risk framework.1 A drawback of seeking a single robust solution is that the fitted model may be extremely conservative, and hence, perform poorly.2

Algorithm development and theoretical analysis should explore methods that combine robustness with real time adaptation. Techniques such as mixing multiple models or switching between them are likely to achieve better performance.

Research Gap 3: New learning algorithms and associated theory are needed for scenario-based safety-critical ML.

___________________

1 R. Chen and I.C. Paschalidis, 2020, “Distributionally Robust Learning,” National Science Foundation: Foundations and Trends in Optimization 4(1–2):1–243.

2 X. Xie, K. Kersting, and D. Neider, 2022. “Neuro-Symbolic Verification of Deep Neural Networks,” arXiv preprint arXiv:2203.00938.

4.4 DETECTING AND PREVENTING SHORTCUT LEARNING

As discussed in Chapter 3, a pervasive risk of statistical learning is the reliance on non-causal (spurious) correlations between the input data and the output targets. The research community is exploring several avenues to detecting such cases (known as learning “short cuts”). If the spurious correlations can be anticipated, the training data can be engineered to disrupt the correlations so that they cannot be exploited by the learning algorithm. However, in safety-critical systems, the goal is to guarantee that all learned statistical relationships have a causal basis. The underlying theory of causality has matured, and recent advances have shown how to learn causally sound representations.3,4 These methods often place additional requirements on the training data, such as controlled interventions, that may be difficult to satisfy in safety-critical applications.

Research Gap 4: Research is needed to develop practical methods of preventing—or at least detecting—the learning of non-causal relationships.

One very important line of work focuses on producing explanations for the outputs of an ML component. Such explanations show which features or image regions provided the basis for the output.5,6 Another form of explanation, or attribution, seeks to identify the training examples that influence the decision. Both forms of explanation can be viewed as “data debuggers” for discovering and repairing spurious correlations. However, like debuggers, they are labor-intensive, and more scalable methods need to be developed.

Research Gap 5: Scalable methods are needed for explanation and attribution of ML predictions.

4.5 UNCERTAINTY REPRESENTATION AND CALIBRATION

Today’s ML systems usually predict either a class label or a real-valued response. Uncertainty is typically represented as a probability distribution over class labels or as a

___________________

3 J. Pearl, 2009, Causality: Models, Reasoning, and Inference, Cambridge University Press, 2nd Edition; J. Pearl and D. Mackenzie, 2018, The Book of Why, Basic Books.

4 Causal Representation Learning, 2023, “NeurIPS Workshop,” https://neurips.cc/virtual/2023/workshop/66497.

5 M. Ancona, C. Öztireli, and M. Gross, 2019, “Explaining Deep Neural Networks with a Polynomial Time Algorithm for Shapley Values Approximation,” arXiv preprint arXiv:1903.10992.

6 S. Khorram, T. Lawson, and L. Fuxin, 2021, “IGOS++: Integrated Gradient Optimized Saliency by Bilateral Perturbations,” Proceedings of the 2021 ACM Conference on Health, Inference, and Learning 174–182, https://doi.org/10.1145/3450439.3451865.

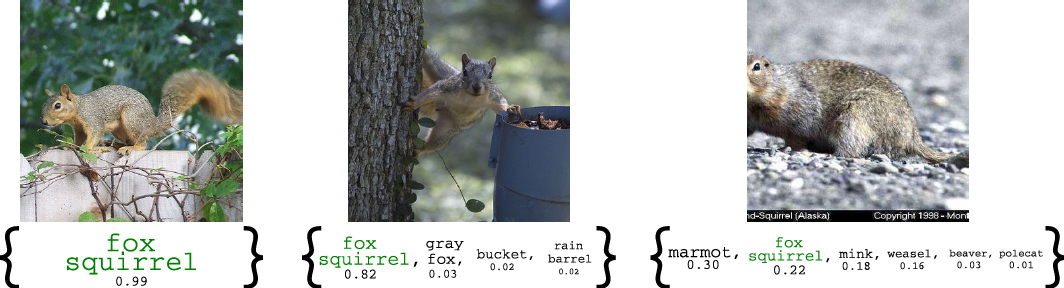

probability distribution (e.g., Gaussian) around the real-valued response. More sophisticated methods provide a prediction interval. A prediction interval for a classifier is a set of class labels along with a guarantee that the true label is inside the set with a specified probability, say 0.99. Figure 4-1 show examples of 99 percent prediction sets for three images computed via the method of conformal prediction. In all cases the true label, “fox squirrel” is in the prediction set.

For real-valued responses, the prediction set is a prediction interval on the real line. For example, the classical prediction intervals for linear regression combines an estimate of epistemic uncertainty (from lacking information) and an estimate of aleatoric uncertainty (from noise).

Most safety-critical applications involve more complex predictions. A medical image system may seek to predict the location, surface shape, and volume of a tumor. A traffic management system may wish to predict the volume and speed of traffic at each location in the road network. A self-driving vehicle may wish to predict the future trajectories of each of the other vehicles around it (plus those of the bicyclists, skateboarders, and pedestrians). It seems that for each new problem, new representations of uncertainty must be developed. A pragmatic approach is to represent the prediction as a d-dimensional vector and represent uncertainty as a d-dimensional multivariate Gaussian distribution. However, this is often a poor fit to the joint distribution of the predictions.

Research Gap 6: A general methodology is needed for representing uncertainty over complex predictions.

Once a representation for uncertainty is chosen, it needs to be calibrated with respect to the post-deployment distribution. Parametric calibration defines a parameterized mapping between the “naive” uncertainty representation (e.g., a real-valued prediction

SOURCE: A.N. Angelopoulos and S. Bates, 2021, “A Gentle Introduction to Conformal Prediction and Distribution-Free Uncertainty Quantification,” arXiv abs/2107.07511.

interval, an ellipsoid of a joint Gaussian) and a calibrated uncertainty representation. For example, Platt scaling is a method for mapping an initial Bernoulli probability (for a binary classification task) into a calibrated Bernoulli probability by passing it through a 2-parameter logistic regression model.7 Temperature scaling extends this to the discrete distribution over K outcomes.8 Because these calibration mappings are low-dimensional, very little calibration data are required to tune them, and tuning can be conducted online if ground truth is available. For example, the ground truth trajectories for cars and pedestrians are available within a few seconds after the prediction is made. Calibration for more complex uncertainty representations is currently done on an ad hoc basis.

Research Gap 7: A general methodology is needed for calibrating complex uncertainty representations.

4.6 VERIFICATION, TESTING, AND EVALUATION OF MACHINE LEARNING–ENABLED SYSTEMS

In the rapidly evolving landscape of ML-enabled systems—ensuring their reliability, safety, and performance—demands robust verification, testing, and evaluation methodologies. Verification involves validating that the system meets specified requirements and behaves as intended, while testing aims to identify potential vulnerabilities and assess system responses under various conditions. Evaluation encompasses a holistic assessment of system performance, addressing factors such as accuracy, safety, and robustness. Furthermore, new metrics are also needed to assess not only the performance of ML algorithms but also their impact in different applications and scenarios. This section delves into the critical aspects of these aspects for ML-enabled systems.

Verification of Machine Learning–Enabled Systems

The intersection of ML and safety-critical systems introduces a myriad of challenges in the verification and scaling of ML components. Research initiatives such as the Defense Advanced Research Projects Agency (DARPA) Assured Autonomy program have played a pivotal role in advancing the verification of ML-enabled systems. Algorithmic advances are crucial for designing and verifying robust ML systems, with a focus on uncertainty

___________________

7 J.C. Platt, 1999, “Probabilistic Outputs for Support Vector Machines and Comparisons to Regularized Likelihood Methods,” Pp. 61–74 in Advances in Large Margin Classifiers, A.J. Smola, P. Bartlett, B. Schoelkopf, and D. Schuurmans, eds., MIT Press.

8 C. Guo, G. Pleiss, Y. Sun, and K.Q. Weinberger, 2017, “On Calibration of Modern Neural Networks,” arXiv preprint arXiv:1706.04599.

quantification, real-time safety assessment, out-of-distribution event detection, and formal verification.

A range of verification approaches has been developed to verify the output of neural networks and systems featuring ML components or systems with ML components.9,10 In recent years, competitions have arisen to gauge the efficacy of these methods, with a specific emphasis on the scalability of verifying deep learning components, the accuracy or soundness of the approaches employed, and the computational speed. These endeavors serve as benchmarks to evaluate and propel advancements in the field of verification.

The current state of the art in verifying neural network components, as least as far as the VNN11 competition is concerned, is the approach proposed by Zhang et al.12 While this is a significant advance, these approaches are benchmarked on small data sets such as MNIST, CIFAR-10, CIFAR-100, and TinyImageNet. In other words, current verification methodology is orders of magnitude behind current state-of-the-art perception models.

Neural network verification therefore presents serious challenges for the research community. Firstly, verifying small yet weakly regularized networks demand an exceptionally precise analysis, which can still be intractable with existing methods. Secondly, scaling precise techniques to even medium-sized networks with a substantial number of neurons, like small ResNets on data sets such as CIFAR-100 or TinyImageNet, proves challenging due to the exponential scaling of costs with required split depth in branch- and-bound algorithms. Thirdly, extending verification to large networks (e.g., VGGNet 16) and data sets (e.g., ImageNet) with dense input specifications necessitates memory-efficient implementations.

Furthermore, verification beyond robust classification may be more important in safety contexts. While such specifications are useful for certain applications (e.g., protecting against malicious security threats), in most applications where real-world distribution shifts (e.g., change in weather conditions) are more relevant, these often cannot be described via a set of simple image perturbations. Such real-world distribution shifts could leverage generative models to learn perturbations followed by verification. This approach could provide meaningful verification under specific environmental assumptions (or shifts). Promising work in this direction includes Wu et al.13

___________________

9 G. Katz, C. Barrett, D. Dill, K. Julian, and M. Kochenderfer, 2017, “Reluplex: An Efficient SMT Solver for Verifying Deep Neural Networks,” Computer Aided Verification: 29th International Conference, CAV 2017, Heidelberg, Germany, July 24–28, 2017, Proceedings, Part I 30, Springer International Publishing.

10 R. Ivanov, J. Weimer, R. Alur, G.J. Pappas, and I. Lee, 2019, “Verisig: Verifying Safety Properties of Hybrid Systems with Neural Network Controllers,” Proceedings of the 22nd ACM International Conference on Hybrid Systems: Computation and Control.

11 VNN, 2023, “4th International Verification of Neural Networks Competition,” VNN-COMP’23, https://sites.google.com/view/vnn2023.

12 H. Zhang, S. Wang, K. Xu, et al., 2022, “General Cutting Planes for Bound-Propagation-Based Neural Network Verification,” Advances in Neural Information Processing Systems 35(2022):1656–1670.

13 H. Wu, T. Tagomori, A. Robery, et al., 2023, “Toward Certified Robustness Against Real-World Distribution Shifts,” 2023 IEEE Conference on Secure and Trustworthy Machine Learning (SaTML).

Research Gap 8: Although there has been progress in verifying properties of neural networks or learning-enabled systems, critical research is still needed to advance scaling, and to pursue novel (beyond scaling) approaches.

Special attention is warranted for large language models (LLMs), which present challenges in this context. It is not feasible to scale existing verification methods to provide meaningful safety guarantees for foundation models. Furthermore, the inherent opacity of some foundation models, often considered out of reach for comprehensive verification, necessitates new paradigms, embracing a “trust but verify” approach. This perspective is especially critical as safety-critical systems increasingly interact with nontransparent foundation models.

Testing and Evaluation of Machine Learning–Enabled Systems

Test and evaluation (T&E) methods and practices are critical to fully realize the promise of using ML in safety-critical systems. T&E tools and processes are intended to generate the necessary evidence that provide assurances that both system components and integrated systems can and will work as expected when deployed in the real world. Robust and effective T&E methodologies are especially important for safety-critical systems to ensure that systems operate without causing harm to life, property, or the environment.14

While it is obvious that robust T&E methods and practices are critical for ML-enabled safety-critical systems, there are major gaps in both the science and practice of T&E for both ML components and integrated systems. The current focus in much of the ML research community is on the evaluation of ML models (i.e., components) and is usually focused on only accuracy for the specific task.

For ML model or component evaluation, there is a need to move beyond accuracy to better understand and evaluate models across a wider set of criteria such as computational performance, transparency, robustness, security, and safety.15 For safety-critical systems, ML components will be integrated into larger, more complicated systems, and these other engineering qualities beyond task accuracy will have a significant effect on other components of the systems. In addition to evaluating across a broader set of engineering qualities, there is also a gap in both the science and practice of understanding the trade-offs among different qualities.

Recent work on Holistic Evaluation of Language Models (HELM) is an example of both evaluating beyond only accuracy and assessing the trade-offs across multiple

___________________

14 National Academies of Sciences, Engineering, and Medicine, 2023, Test and Evaluation Challenges in Artificial Intelligence-Enabled Systems for the Department of the Air Force, National Academies Press, https://doi.org/10.17226/27092.

15 V. Turri, R. Dzombak, E. Heim, N. VanHoudnos, J. Palat, and A. Sinha, 2022, “Measuring AI Systems Beyond Accuracy,” arXiv preprint arXiv:2204.04211.

SOURCE: P. Liang, R. Bommasani, T. Lee, et al., 2022, “Holistic Evaluation of Language Models,” arXiv abs/2211.09110, https://arxiv.org/abs/2211.09110. CC BY 4.0.

qualities of LLMs.16 HELM evaluates LLMs across accuracy, calibration error, robustness, fairness, bias, toxicity, and inference time and offers an analysis of the correlations of model behavior across these properties (Figure 4-2). While LLMs are not yet ready to be used in safety-critical applications, the HELM approach is an initial example of the types of evaluation practices required for ML models that will be used in safety-critical systems.

Evaluation of individual ML models is considered learner-centric evaluation—assessing the quality of the learning algorithm that produces the model. This is contrasted with application-centric evaluation—assessing the performance and behavior of an integrated system (including ML components) in the context of the deployment environment (e.g., the real world). While there is a need to expand and improve learner-centric evaluation methodologies, it is well known in both the ML and systems engineering communities that the gap is even larger for application-centric evaluation practices.17

Application-centric evaluation spans the life cycle of ML-enabled systems. A simple version of the system life cycle is to consider three phases of the system: development, integration, and deployment, and operations. The learner-centric evaluation approaches

___________________

16 P. Liang, R. Bommasani, T. Lee, et al., 2022, “Holistic Evaluation of Language Models,” arXiv preprint arXiv: 2211.09110.

17 B. Hutchinson, N. Rostamzadeh, C. Greer, K. Heller, and V. Prabhakaran, 2022, “Evaluation Gaps in Machine Learning Practice,” Association for Computing Machinery, https://doi.org/10.1145/3531146.3533233.

described above are useful in the development phase of the system life cycle. Unfortunately, there is little in the academic literature, standards, or engineering best practices concerned with evaluation of ML components or systems in the integration and deployment phase or the operations phase.18 Approaches to evaluation in the operations phase is particularly challenging, as there is a need for continuous evaluation to ensure that the ML components are continuing to behave as expected. Finally, system life cycles are iterative and incremental which requires new methods for managing change, including changes to data for training models, model parameters, system integration and configuration, and deployment environment where small changes can have very large effects on system behavior.19

Finally, the R3 concept of reliability, robustness, and resilience is useful for understanding the current state of both learner-centric and application-centric test and evaluation methodologies.20 Simply put, reliability is the system’s ability to perform well under controlled and expected conditions. Robustness is the system’s ability to continue to perform well under off-nominal conditions (e.g., slight shifts away from the distribution of the training data in ML). Resilience is the system’s ability to perform or recover under unexpected or unpredicted conditions. The ML community has focused primarily on learner-centric reliability and has started to focus more on learner-centric robustness in recent years, while resilience is largely unaddressed. Application-centric evaluation approaches for ML-enabled systems are largely a green field.

Research Gap 9: Research is needed to develop application-centric evaluation methodologies for reliability, robustness, and resilience across the system life cycle.

Metrics for Assessing Machine Learning–Enabled Systems

Metrics associated with safety-critical systems are diverse and unique to the safety specification for the system. There are typically metrics that can be directly related to a software component—for example, inherent failure rates, system availability, validation or test coverage, or requirements traceability. The incorporation of an ML component in a safety-critical system introduces new challenges to developing effective metrics either for the software component or for broader system safety performance.

First, ML models’ inherent opacity makes it difficult to pinpoint why a model performs well or poorly. It’s not always clear which specific aspects of the data may

___________________

18 J. Chandrasekaran, T. Cody, N. McCarthy, E. Lanus, and L. Freeman, 2023, “Test & Evaluation Best Practice for Machine Learning-Enabled Systems,” arXiv preprint arXiv:2310.06800.

19 M.L. Cummings, 2023, “What Self-Driving Cars Tell Us About AI Risks,” IEEE Spectrum, July 30, https://spectrum.ieee.org/self-driving-cars-2662494269.

20 G. Zissis, 2019, “The R3 Concept: Reliability, Robustness, and Resilience,” IEEE Industry Applications Magazine 25(4):5–6, https://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=8732037.

be influencing the model’s behavior or that of the system. The model performance or interpretability results could be driven by model architecture, training methodology, or unique properties of the training or real-world data. The scale or complexity of a model and training data make it difficult to support the essential explainability and traceability of the model or system outcomes. These are key attributes for the safety system development process where the system metrics are expected to directly relate to model performance metrics, which is an active area of research.

Second, unlike benchmarks, which are a set of data points for ML model performance comparison, safety system metrics are focused on measuring or controlling an essential operational variable or outcome, while controlling for as many confounding ones as possible. ML-enabled system accuracy is difficult to assess when operating in real-world environments with continuously evolving parameters. The challenge is exacerbated by the ML model’s hypersensitivity to input data, resulting in performance degradation—for example, image recognition failure in an industrial robot vision system or model hallucination in a medical diagnosis application. And of course, the system’s inability to recognize when it is operating in corner cases or outside a defined operational design domain (ODD) means that meaningful safety metrics are nearly impossible to develop for the ML model itself. Safety systems routinely incorporate cooperative or supplemental safeguards to offset component deficiencies, but these may not fully address ML model degradation or recognition problems.

Research Gap 10: Research is needed to develop metrics and guardrails for specific performance variables, operating scenarios, and tasks. Generalized performance metrics are not generally sufficient for evaluating safety.

4.7 RISK AND MITIGATION STRATEGIES FOR MACHINE LEARNING IN SAFETY-CRITICAL APPLICATIONS

ML supports two key functions in safety-critical systems:

- Perception (computer vision). Self-driving vehicles rely on cameras, lidar, radar, and related technologies to sense the environment. ML is by far the most successful method for creating perceptual systems that analyze these data to detect and localize people, animals, other vehicles, stationary obstacles, and so forth. Additional learning components predict how these entities will move. These perceptual systems are typically trained on images and video that have been labeled by human workers.

- Planning and control. ML, combined with classical techniques from ML and control engineering, can create systems for real-time path planning and control. These systems are usually trained via reinforcement learning applied first in simulation and then transferred to real-world robot platforms.

As these functions are critical to the safe operation of the system, their risks must be understood.

To apply ML in safety-critical systems, methods are needed for quantifying and controlling these risks. These are important and open areas for research exploration. For example, can one develop a methodology for task analysis that can place bounds on the degree of distribution shift that the system will encounter? Is it possible to measure the extent to which the fitted model generalizes systematically over the input space? Can one develop methods that can characterize the dimensions of variability in a data set and identify dimensions along which there is insufficient variability? Is there a methodology for estimating the number of novel object classes that are not present in the training data?

To control these risks, can one develop learning approaches that are robust to distribution shifts, perhaps by fitting causal models to the data? These approaches might require additional task analysis by ML engineers to design the space of causal models. To address systematic generalization, can one detect failures of systematicity and collect (or generate) new data points to repair those failures? Can one develop methods for detecting and accommodating novelty? Novelty detection only succeeds if the system has discovered features such that novel objects have a representation that is distinct from the representations of the known objects. Hence, this depends on having sufficient variability in the data. What methodologies can increase variability where it is insufficient and hence reduce the risk that novel objects will exhibit unmodeled variation? For example, if one trains on massive collections of images from unrelated tasks, how well can this reduce the novelty detection risk? Can variability be introduced artificially using generative models of images?